Introduction

Few parts of the MDR have sparked as much discussion as Rule 11. This is not surprising. Software fundamentally defies traditional methods of categorizing medical devices into established groups. Each software device is unique, impacting clinical decision-making in very specific ways. Indeed, the diverse nature of software devices was one of the main reasons behind the EU Commission’s decision to adopt the general safety and performance approach proposed by the IMDRF. This approach recognizes that it has become impossible to regulate each medical device group individually. Instead, common principles based on good scientific practices must be established that apply to all devices.

Another issue is that, at the time of writing, Rule 11 effectively exists in two distinct forms. The first is the text of the rule itself and the interpretation provided by the MDCG. The second is how the rule is actually applied in the field. In principle, these two should align. In practice, they do not. The reason is straightforward: implementing Rule 11 as intended represents a monumental shift for the medical technology field. Regulators are aware of this and have been cautious about enforcing in practice a change that could stifle an expanding field. Achieving full application and a common understanding could take decades, similar to the pharmaceutical industry’s shift to evidence-based medicine 50 years ago. This discrepancy between theory and practice makes discussing Rule 11 challenging, as real-world applications often diverge significantly from theoretical intentions.

For this reason, we have decided to divide this guidance into two parts. In Chapter 1, we explore the “theory” of Rule 11. We show that applying Rule 11 means assessing the transfer of responsibility from healthcare professionals to standardized instructions, that is, the software. The perspective provided in this first section is essentially where we anticipate the application of the rule to evolve over the next years. In Chapter 2, we analyze the “reality” of the application of Rule 11 on the market, providing practical tips and warnings with an eye to the future. Given the rapid changes in the field following the release of the MDR, what is standard practice today may soon be outdated.

The theory

Rule 11 consists of three parts. The first part provides the general classification rules for software with diagnostic and therapeutic purposes. The second part provides specific rules for the classification of software for monitoring physiological processes and vital physiological parameters. The third and final part stipulates that any software not covered by the first two parts is classified as Class I. We will analyze each part separately.

The first part of Rule 11 states:

Software intended to provide information which is used to take decisions with diagnosis or therapeutic purposes is classified as class IIa, except if such decisions have an impact that may cause:

- Death or an irreversible deterioration of a person’s state of health, in which case it is in class III,

- A serious deterioration of a person’s state of health or a surgical intervention, in which case it is classified as class IIb.
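The wording quoted above amounts to a short decision procedure. The following Python sketch is only an illustration of that reading: the names `DecisionImpact` and `rule11_part1` are hypothetical, and the two inputs (whether the software informs a diagnostic or therapeutic decision, and the worst impact that decision may cause) are assumed to have been judged beforehand by the manufacturer.

```python
from enum import Enum
from typing import Optional


class DecisionImpact(Enum):
    """Worst impact the informed decision may cause (hypothetical labels)."""
    DEATH_OR_IRREVERSIBLE = 1   # death or irreversible deterioration of health
    SERIOUS_OR_SURGICAL = 2     # serious deterioration or surgical intervention
    NONE_OF_THE_ABOVE = 3       # neither exception applies


def rule11_part1(informs_dx_or_tx_decision: bool,
                 impact: DecisionImpact) -> Optional[str]:
    """Class under the first part of Rule 11, or None if this part does not apply."""
    if not informs_dx_or_tx_decision:
        return None  # the remaining parts of Rule 11 must be assessed instead
    if impact is DecisionImpact.DEATH_OR_IRREVERSIBLE:
        return "III"
    if impact is DecisionImpact.SERIOUS_OR_SURGICAL:
        return "IIb"
    return "IIa"  # default class for diagnostic or therapeutic decision support


print(rule11_part1(True, DecisionImpact.SERIOUS_OR_SURGICAL))  # IIb
```

The sketch deliberately leaves out the hard part, which is deciding the two inputs; the rest of this chapter is about how to make that judgment.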

Information that is used to take decisions

The first part of MDR Annex VIII Rule 11 begins with the words “Software intended to provide information which is used to take decisions…”. These words highlight a crucial aspect of how medical device software works, namely by providing information to the user. Here we intentionally speak of “user”. In theory, a machine could also use the output of medical software to take automated decisions concerning patient management. In this case, however, the MDR speaks of “closed loop systems”, the classification of which is covered in Rule 22.

Important: User vs. patient. “User” and “patient” are distinct roles, not necessarily distinct people. Both roles can be covered by the same physical person. Consider, for example, an app that provides a physiotherapy exercise plan to the patient. In this case the patient is also the user. However, the two roles can also be held by different people. Consider, for example, FFP3 masks. They are used by the healthcare professional, and protect the patient as well as the healthcare professional from infection risk.

What does it mean that software “provides information that is used to take decisions”? It means that the information provided by the software can influence patient management (see also box below). Why does this matter? In most countries, medical practice is strictly regulated. Only individuals who have undergone years of training can intervene in clinical decision-making. These professionals (doctors, nurses, physiotherapists, pharmacists, dentists, psychologists, radiologists, etc.) are tasked with the clinical management of patients. Software that impacts clinical decision-making takes on clinical responsibility, fully or partially, by encoding some of these medical tasks into a set of instructions¹. Just as governments require healthcare professionals to undergo the training necessary to manage medical responsibilities, they also require that software assuming medical responsibility meets specific requirements.

Important: Patient management. It will be obvious to most, but it is worth emphasizing this point to avoid misunderstanding. When the MDR refers to “patient management,” it does not refer to managing patient data for administrative purposes. Instead, “patient management” means the comprehensive coordination and oversight of a patient’s clinical care, which includes diagnosis, treatment, and follow-up.

Before analyzing how software influences clinical decision-making, let’s examine two examples of software that do not affect clinical decision-making. Consider a software application that enables patients to remotely discuss their symptoms with their doctor to determine if they need to stay home from work for medical reasons. During the call, the doctor performs a clinical evaluation (anamnesis), assesses the symptoms as consistent with the flu, and decides that an in-person visit is unnecessary. The doctor then issues a digital medical excuse for work absence and schedules a follow-up consultation. In this scenario, the software solely facilitates communication and does not engage in any medical decision-making. All critical decisions, from conducting the anamnesis to determining the necessity of follow-up visits, are made by the licensed healthcare professional. This software provides a platform for a conversation like the one that could otherwise take place in the doctor’s office.

Important: Telemedicine. As we will explore below, just because software is classified as “telemedicine” does not mean it cannot influence clinical decisions. Consider the previous example, but suppose the consultation involves inspecting a patient’s skin using a camera image. An inaccurate image could lead the practitioner to make an incorrect clinical decision. If the software allows this kind of application, it becomes the manufacturer’s responsibility to ensure that the images collected by the software are suitable for diagnostic purposes.

Consider another example: software that enables doctors to digitally collect and store clinical data. The doctor conducts the anamnesis and stores this alphanumeric data in a database for later retrieval in its original form. The software does not modify or process the data; it merely stores it for later retrieval, effectively replacing traditional paper records. In this scenario, the software does not assume any medical responsibility, nor does it perform any medical decision-making functions; it simply facilitates data storage. Every decision (what information to collect, how to interpret it) is made by the licensed healthcare professional.

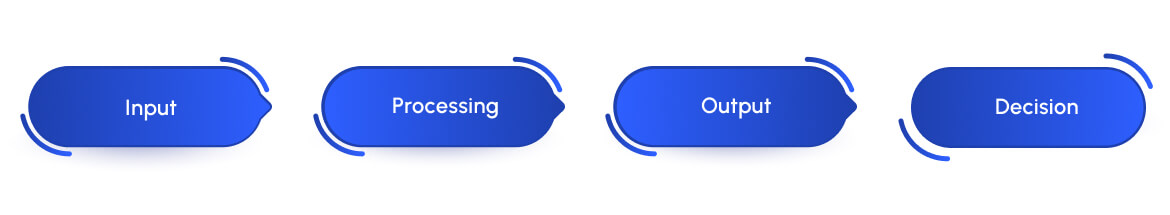

Important: Storage and retrieval. When MDCG 2019-11 specifies that storage and retrieval do not constitute a medical purpose, it refers to the technical action of storing and retrieving the data. The MDCG is not referring to the action of deciding what must be stored or retrieved. See also the section on clinical input below.

To analyze how software can influence clinical decision-making, we examine the workflow depicted in Figure 1.1. In traditional clinical decision-making, healthcare professionals start by collecting clinical information from patients (input). They then analyze this data to discern key details and patterns (processing). To understand the output phase, consider a healthcare professional who seeks additional insights from a colleague’s expertise. This professional communicates their findings using values, words, texts, and visuals. The final phase (decision) involves the actual decision-making process, where the professional determines the diagnosis and treatment plan for the patient. In the remainder of this section, we explore how software can impact each step in this chain.

Clinical input

Software can impact the content, timing, or quality of the clinical input.

- Content. Software can influence the content of clinical data by determining which information is gathered for patient management. Consider a dermatology software that conducts patient anamnesis and sends this information to one of the dermatologists in its database for diagnosis or therapy decisions. In this case, the software, not the doctor, determines the anamnesis content. It does not matter whether the anamnesis consists of static questionnaires or dynamically adapts to individual patients. The software might request too little, too much, or simply incorrect information for a specific patient’s condition, potentially leading the dermatologist to make an erroneous clinical decision.

Important: Paper questionnaires. It has been suggested that, based on the above analysis, all paper or electronic questionnaires used in medical practices would qualify as medical devices. Clearly, this is not the case. When you visit a doctor and are handed an anamnesis questionnaire to complete, it is under the doctor’s responsibility. However, in the scenario described above, the software determines the content of the electronic questionnaire before the contact with the doctor. Thus, it is not the doctor who decides which questionnaire to administer, but the software itself. It is this key difference that signifies the transfer of medical responsibility from the healthcare professional to the software.

- Timing. Software can influence the timing of the clinical input when it, rather than the healthcare professional, determines when information is gathered for patient management.

Important: Reminders. Software that reminds patients of scheduled doctor visits does not assume responsibility for the timing of the input, as the decision on when to conduct the visit remains with the healthcare professional.

- Quality. Software influences the quality of the input when it affects how the data is collected. For example, software could instruct a patient to take a picture of a body location, providing feedback to the patient to improve the picture quality. Alternatively, software can tell patients whether the electrodes of an ECG system have been properly applied to ensure the correct signal-to-noise ratio. In traditional medical practice, a healthcare professional would evaluate the picture or the ECG signal live and guide the patient on any necessary adjustment. Software that takes over the role of guiding the patient to the correct input also takes over the responsibility that comes with this role.

Processing

Processing is perhaps the most intuitive aspect of the information workflow where the impact of software on clinical decision-making becomes evident. Medical software commonly processes input data to reveal insights that are not obvious in the raw data. This processing can take several forms:

- Calculation of medical scores: Medical software frequently involves the computational generation of scores based on patient data, which are used to guide clinical decisions. These scores, such as risk assessments for cardiovascular diseases or scoring systems for the severity of chronic illnesses, are developed through extensive research and validation involving large patient populations.

Important: Combining scores. In the medical literature, most scores are validated individually. The medical field exercises caution when aggregating scores. The guiding principle is that the fact that each score works individually does not mean that several scores will also work in combination.

- Feature Extraction, Pattern Recognition, Data Segmentation, etc.: This process involves algorithms designed to identify specific patterns or features within complex datasets. Here are some examples. In cardiology, software analyzes ECG (electrocardiogram) traces to detect abnormalities like atrial fibrillation by identifying irregularities in heart rhythm patterns. In radiology, advanced algorithms analyze imaging data to detect and classify tumors in MRI or CT scans. In dermatology, image analysis software evaluates skin lesions from photographic images, using pattern recognition to suggest potential diagnoses, such as distinguishing between benign moles and malignant melanomas. In ophthalmology, software tools segment retinal scans to identify and classify regions of interest, such as blood vessels or indicators of diabetic retinopathy, aiding in the early detection and management of eye diseases.
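To make the first form of processing concrete, the sketch below computes the CHA2DS2-VASc stroke-risk score, which is also used as an example later in this chapter. The point weights follow the score as commonly published; the `Patient` structure and its field names are hypothetical, and the code is an illustration of how a score turns raw inputs into decision-relevant information, not a validated implementation.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Inputs to the score (hypothetical field names)."""
    age: int
    female: bool
    congestive_heart_failure: bool = False
    hypertension: bool = False
    diabetes: bool = False
    prior_stroke_tia_or_thromboembolism: bool = False
    vascular_disease: bool = False


def cha2ds2_vasc(p: Patient) -> int:
    """Sum the CHA2DS2-VASc points (range 0 to 9)."""
    score = 0
    score += 1 if p.congestive_heart_failure else 0             # C
    score += 1 if p.hypertension else 0                         # H
    score += 1 if p.diabetes else 0                             # D
    score += 2 if p.prior_stroke_tia_or_thromboembolism else 0  # S2
    score += 1 if p.vascular_disease else 0                     # V
    score += 1 if p.female else 0                               # Sc
    if p.age >= 75:                                             # A2
        score += 2
    elif p.age >= 65:                                           # A
        score += 1
    return score


# 72-year-old woman with hypertension: 1 (age 65-74) + 1 (female) + 1 (hypertension)
print(cha2ds2_vasc(Patient(age=72, female=True, hypertension=True)))  # 3
```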

Clinical output

Software can impact the content, timing, or quality of the clinical output.

- Content. Software can influence decision-making by determining which information to show to the user. For example, a clinical decision support system might filter and highlight critical patient data during emergency situations to assist clinicians in making rapid decisions. By selecting which information to display, the software can affect the healthcare professional’s decision.

Important: Information overload. The principle “the more, the better” seldom applies in clinical decision-making, where an overload of information can overwhelm the user, leading to poorer decisions compared to when less information is provided.

- Timing. Software influences the timing of the output when it determines when to present the clinical output to the user. For example, a patient monitoring system used in intensive care units might decide the frequency at which updates on vital signs are displayed to healthcare professionals, or it might determine when to issue alerts if a patient’s condition changes suddenly. By setting these parameters, the software plays a crucial role in how and when interventions are made, directly impacting patient care outcomes.

- Quality. Software can impact (intentionally or unintentionally) the quality of the clinical data presented to the user. For example, telemedicine software might render images in a way that makes it impossible for healthcare professionals to recognize certain details. Alternatively, software might present the ECG signal in a format that does not allow a doctor to identify anomalous patterns.

Decision

Software can impact the quality of collected clinical data, whether intentionally or unintentionally. For instance, telemedicine software may process images in a manner that prevents healthcare professionals from recognizing specific details. Similarly, software might display ECG signals in a format that obstructs a doctor’s ability to detect anomalous patterns.

Important: The role of informational material. A point that is often forgotten is that software can influence clinical decision-making even through its informational material. The informational material provided with a medical device is an integral part of the device. A manufacturer may thus influence clinical decisions not only through the information provided by the software but also through the information provided with the software. For example, if a manufacturer claims a specific clinical benefit, this may lead a healthcare professional to prescribe that particular device. In such cases, the software device is affecting clinical decision-making through the informational material.

In instances where software provides outputs or elements that are further processed by healthcare professionals to make the final decision, the presence of these professionals does not automatically absolve the software of responsibility. In some cases, once the software has processed information, it may not be possible for the healthcare professional to correct any errors introduced. For example, if software processes an image and loses critical details, this missing information may go unnoticed by the healthcare professional, leaving them unable to attempt recovery of the lost details.

Important: Software that “performs” the therapy. It has been suggested that software that “performs” the therapy does not “provide information that is used” for therapeutic purposes. This reasoning is flawed for the simple reason that software cannot “perform” therapies. This is a striking difference from physical appliances that provide therapy directly to the body, such as defibrillators, radiation therapy systems, insulin pumps, etc. As discussed earlier, the mode of action of software is through information. The only thing software can do is present the therapeutic plan information to the patients, who then implement it in their therapy.

Purpose matters!

We conclude the analysis of how software can affect clinical decision-making with an important remark. Merely having the potential to impact clinical decision-making does not qualify software as doing so. The manufacturer must “communicate” the idea that the software is suitable for a medical use, either directly (such as through its stated intended purpose or claims) or indirectly (via marketing materials or other forms of written or oral communication).

Consider the following scenario. A dermatologist utilizes a commercially available video calling application, such as Zoom or WhatsApp, to assess a patient for a butterfly-shaped rash across the cheeks and nose, which is indicative of lupus. These applications significantly process images: they compress images to minimize bandwidth and often apply filters to enhance visual appearance. As a result, there is no guarantee that the images displayed by these applications maintain the necessary quality for a dermatologist to discern subtle differences in skin conditions. Nevertheless, applications like Zoom or WhatsApp are not designed for telemedicine or medical diagnoses, nor are they sold for this purpose. Using them for such purposes is entirely at the discretion and risk of the healthcare professional. This situation is similar to when a healthcare professional decides to use a medical device off-label.

However, if a software manufacturer claims or implies that their software can be used for dermatological assessments, they must then demonstrate that the software meets the necessary performance and safety requirements for this specific purpose. Should a manufacturer choose to integrate platforms like Zoom or WhatsApp as components of their telemedicine system, it becomes their responsibility to ensure and prove that the image quality and processing capabilities of these platforms are adequate for the intended medical use.

Diagnostic and therapeutic purposes

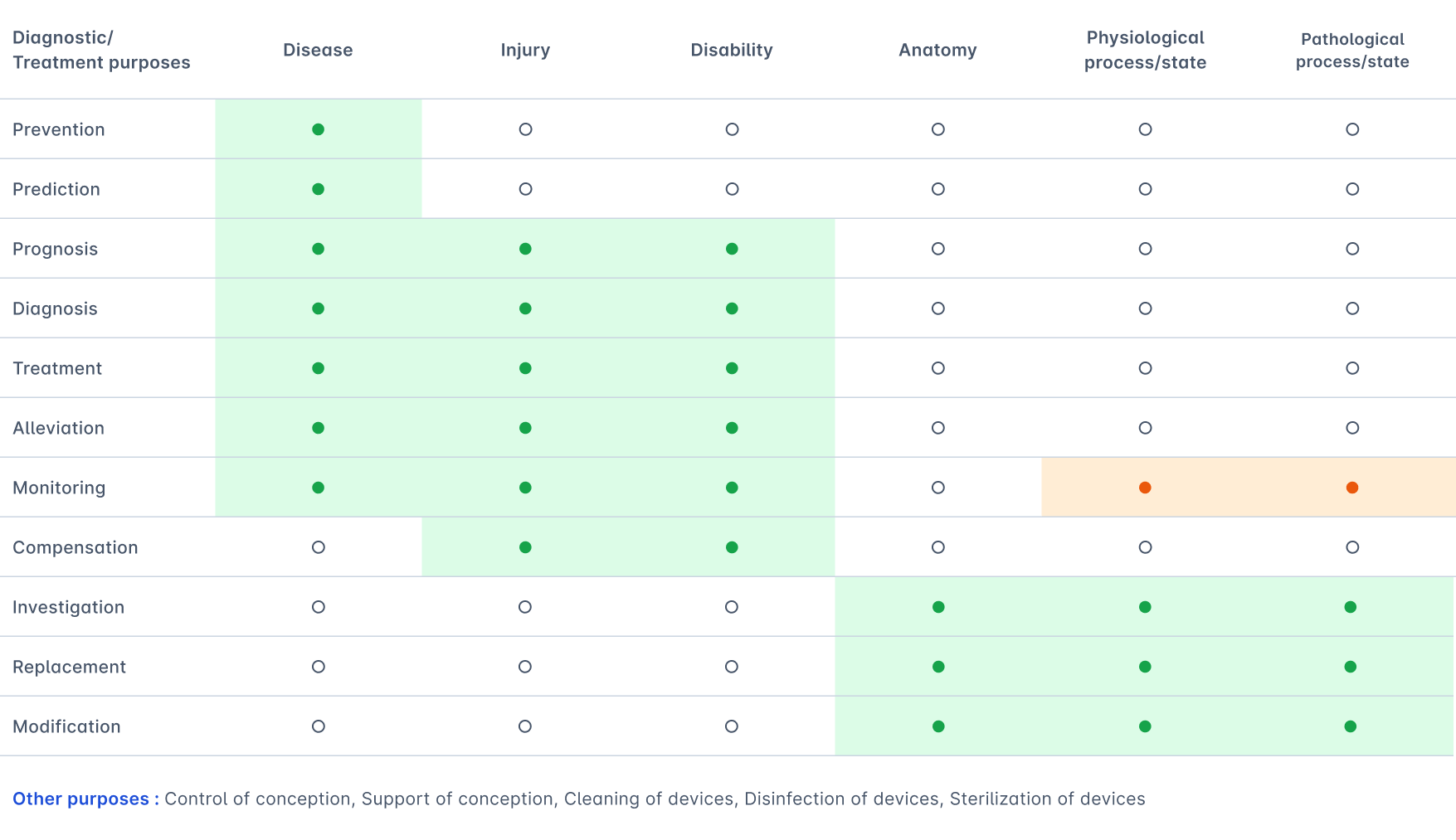

The formulation of Rule 11 further specifies the nature of the decisions covered by the rule as “decisions with diagnostic or therapeutic purposes.” This specification is important since we can classify the medical purposes specified in the MDR Article 2 into diagnostic/therapeutic and “other” purposes. We summarized this classification in Table 1.1. Rule 11 applies only to the diagnostic and therapeutic purposes. This interpretation aligns with MDCG 2019-11 and MDCG 2021-24.

Higher risk decisions

The first part of Rule 11 concludes with two exceptions. The phrasing of these exceptions begins with the words “except if such decisions have an impact that may cause:…”. This formulation suggests that the crucial factor in determining whether the exceptions apply is the diagnostic or therapeutic decision, regardless of the extent to which the software contributes to the decision-making process. This approach in the software classification system of the MDR, therefore, appears to diverge from that proposed in IMDRF/SaMD WG/N12, which considers both the clinical decision and the software’s contribution to that decision. However, MDCG 2019-11 proposes interpreting this aspect of Rule 11 in a manner that aligns with the IMDRF classification. Yet, the acceptance of the MDCG interpretation might vary depending on the notified body. Indeed, a notified body might argue that if the European Commission had intended to align this section of the regulation with the IMDRF methodology, they would have explicitly done so, as they have in other sections of the regulation.

Consider for example the CHA2DS2-VASc calculation. This score provides information which is used to take therapeutic decisions, specifically, whether to administer anticoagulants to a patient to prevent stroke. A wrong decision may cause a serious deterioration of a person’s state of health or a surgical intervention. Strictly interpreting Rule 11, therefore, could lead to classifying software that implements this score as class IIb. However, the software only partially contributes to the decision; therefore IMDRF classifies this tool as IIa because it “is not normally expected to be time critical in order to avoid death, long-term disability, or other serious deterioration of health.”²

Finally, MDCG 2019-11 elaborates on what constitutes a “serious deterioration in state of health” of a patient, user or other person, as including the following:

I. a life-threatening illness or injury,

II. permanent or temporary impairment of a body structure or a body function (including impairments leading to diagnosed psychological trauma),

III. a condition necessitating hospitalisation or prolongation of existing hospitalisation,

IV. medical or surgical intervention to prevent I or II, examples of this can be:

  - professional medical care or additional unplanned medical treatment
  - a clinically relevant increase in the duration of a surgical procedure

V. a chronic disease,

VI. foetal distress, foetal death or any congenital abnormality (including congenital physical or mental impairment) or birth defects.

Part 2

The second part of Rule 11 focuses on the classification of monitoring software. It states that:

Software intended to monitor physiological processes is classified as class IIa, except if it is intended for monitoring of vital physiological parameters, where the nature of variations of those parameters is such that it could result in immediate danger to the patient, in which case it is classified as class IIb.
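The second part of Rule 11 can also be read as a short decision procedure. The Python sketch below is a hedged illustration of that reading: the function name `rule11_part2` and its parameters are hypothetical, and the two judgments it takes as input (whether the monitored parameter is vital in the intended clinical context, and whether its variations could put the patient in immediate danger) are assumed to have been made by the manufacturer for the intended use.

```python
from typing import Optional


def rule11_part2(monitors_physiological_process: bool,
                 vital_in_intended_context: bool,
                 variations_may_cause_immediate_danger: bool) -> Optional[str]:
    """Class under the second part of Rule 11, or None if this part does not apply."""
    if not monitors_physiological_process:
        return None  # not monitoring software in the sense of this part
    # Both conditions must hold for the higher class.
    if vital_in_intended_context and variations_may_cause_immediate_danger:
        return "IIb"
    return "IIa"


# Blood pressure monitoring intended for an emergency or resuscitation setting
print(rule11_part2(True, True, True))    # IIb
# Blood pressure monitoring intended for routine use at home
print(rule11_part2(True, False, False))  # IIa
```

The same physiological parameter appears in both calls; only the intended clinical context changes the outcome.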

As noted in MDCG 2019-11, this rule “was introduced to ensure that medical device software which has the same intended purpose as (hardware) devices which would fall under Rule 10, third indent, are in the same risk class.” However, unlike Rule 10 third indent, this part of Rule 11 applies to software intended to be used for monitoring any/all physiological processes and not just vital physiological processes.

What is the difference? Physiological processes refer to the functions and processes occurring within the body. Examples include heart rate, blood pressure, body temperature, oxygen saturation, respiratory rate, muscle strength, metabolic rate, and hormonal levels. Parameters that can be measured concerning these processes are called physiological parameters. Monitoring a physiological process or state means continuously or periodically measuring and tracking these parameters to observe trends, detect abnormalities, and, where applicable, alert the user to significant changes that may indicate a need for medical intervention. According to the MDR, the presence of alarms is compulsory in the case of situations which require intervention or that could lead to death or severe deterioration of the patient’s state of health³.

A vital parameter is a subclass of physiological parameters that involves processes essential for sustaining life, such as heart rate, respiratory rate, blood pressure, body temperature, and blood oxygen levels. Crucially, it is nearly impossible to provide an exhaustive list of vital physiological parameters, as their significance can vary widely depending on the clinical context. This context includes the patient’s overall condition, existing comorbidities, and the specific medical setting or scenario. For instance, for a patient with a heart condition taking anticoagulants and experiencing a gastric ulcer leading to a digestive hemorrhage, monitoring the International Normalized Ratio (INR) is vital. A high INR would lead doctors to treat for anticoagulant overdose, whereas a normal INR would suggest that surgical intervention is needed. In this context, INR monitoring becomes crucial as it directly influences the therapeutic decision. However, when a patient is on anticoagulants due to chronic stable heart disease, monitoring INR, while important, is not classified as “vital.” The immediate risk of life-threatening complications is lower, and the focus is on maintaining therapeutic levels to prevent thromboembolic events.

The same emphasis on context is necessary to assess whether variations of those parameters are such that they could result in immediate danger to the patient. Indeed, the class IIb classification is open to interpretation only if the medical context is not considered. Devices monitoring vital parameters in contexts where timing is critical to medical decisions should be in a higher risk class. For instance, in an emergency or resuscitation setting, the accuracy of a blood pressure monitor is crucial because it directly influences life-saving decisions. Hence, such devices are classified as Class IIb. On the other hand, a blood pressure monitor used at home for routine monitoring doesn’t have the same immediate impact on medical decisions, so it is classified as Class IIa. This distinction ensures that devices are appropriately regulated based on their intended use and potential impact on patient safety.

Part 3

The final part of Rule 11 simply states that “All other software is classified as class I”, meaning that any software not covered by the first two parts is considered low risk. According to our analysis and as noted by MDCG 2019-11 and MDCG 2021-24, an example of software that does not fall under the first two parts is software intended to support conception (note that software intended to prevent conception is covered under Rule 15).

Conclusions

It has been suggested that Rule 11 is incomplete, inconsistent, and assigns classes to medical device software that are excessively high compared to other devices. However, this criticism is unfounded.

Firstly, Rule 11 is not incomplete. Instead, as we have seen above, it allows classifying devices across essentially all medical purposes covered by the MDR.

Secondly, the rule is not inconsistent. The critique that Rule 11 inappropriately mixes diagnostic and therapeutic purposes stems from a misunderstanding. Rule 11 intentionally groups these functions together because, in the realm of medicine, the line between diagnostic and therapeutic purposes can often be blurred: software that assists in diagnosis frequently plays a critical role in guiding therapy.

Thirdly, it is hard to see how Rule 11 is supposed to penalize medical software with respect to other medical devices. The time of cathode-ray tube monitors is long gone. Today, almost any medical device that supports clinical decision-making or that monitors parameters incorporates some form of medical software. It is a common misconception that Rule 11 applies exclusively to stand-alone software. In reality, Rule 11 covers any software with medical purposes, regardless of its implementation, whether operating in the cloud, on a computer, on a mobile phone, or as integrated functionality within a hardware medical device. This is a striking difference from the IMDRF classification of SaMD, which only applies to software that is not part of a hardware medical device.